Setting up real-time facial mocap inside a game engine can feel tedious, especially when you need access to all ARKit blendshapes, head rotation, and eye rotation without writing a full network layer yourself. GodotARKit, created by Jules Neghnagh–Chenavas, offers a simple way to connect Live Link Face to Godot and stream facial data into your scene with almost no setup.

It captures ARKit’s full blendshape stream over UDP and exposes everything through a singleton, letting you drive ARKit-ready models directly in-game using a minimal script. Since it works at runtime, it fits both real-time animation workflows and in-game mocap features.

What This Tool Does

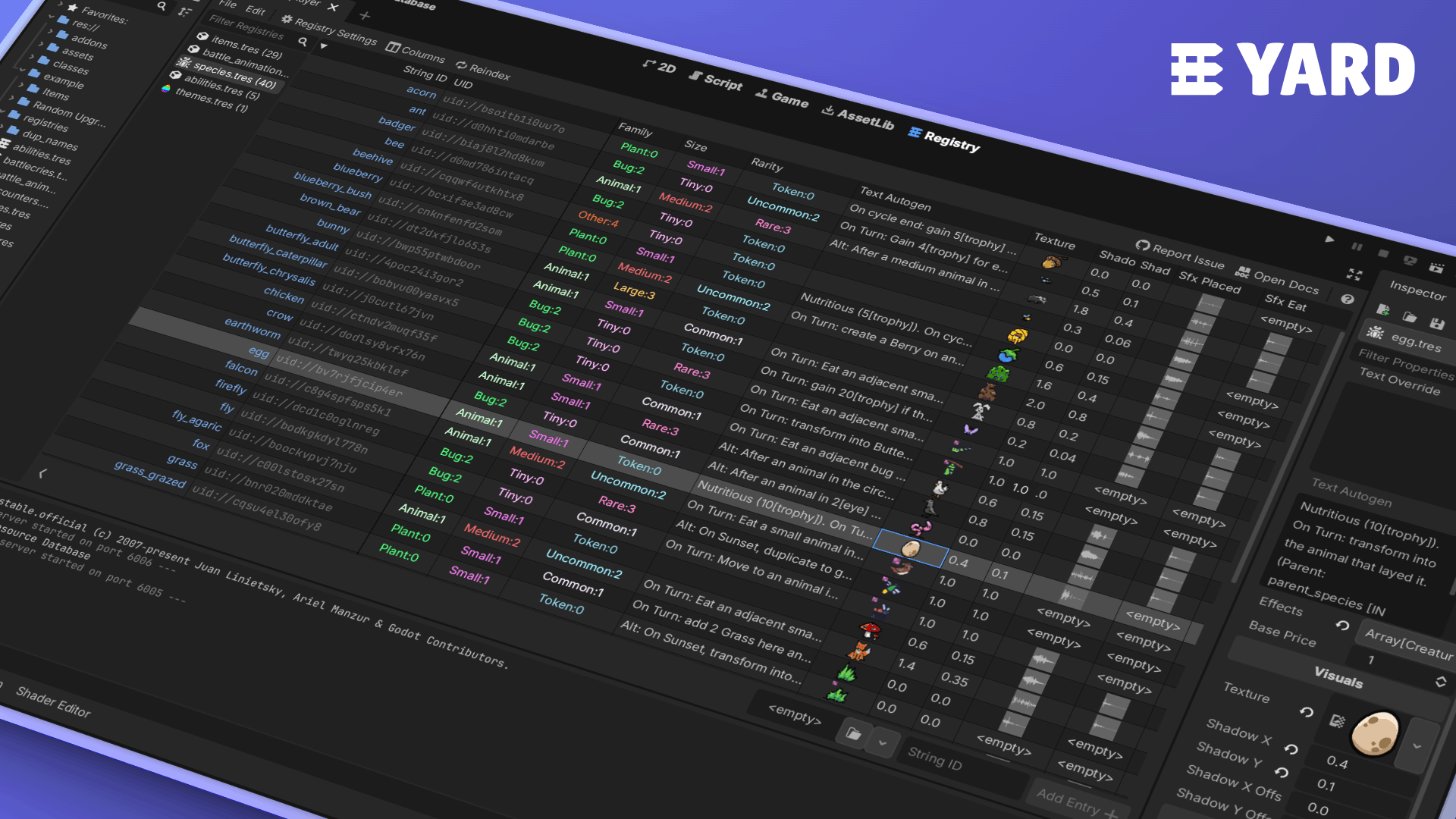

GodotARKit is a plugin for Godot 4 that receives ARKit facial motion capture data via UDP streaming. It integrates with Epic Games’ Live Link Face app for iOS, making all 52 standard ARKit blendshapes (plus head and eye rotation) available through an autoloaded singleton. This allows you to animate ARKit-compatible characters in real time using any mesh that contains correctly named shape keys.
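Since everything depends on the mesh having correctly named shape keys, it can help to check a model before streaming anything. The following is a minimal sketch, not part of GodotARKit: `EXPECTED_SHAPES` is only a small subset of the 52 ARKit names (in ARKit's lowerCamelCase spelling), and `face_mesh` and `find_missing_shapes` are hypothetical names chosen for illustration.

```gdscript
extends Node

# Assumption: a small subset of the 52 ARKit blendshape names, for illustration.
# Extend the list to cover every shape your model is supposed to support.
const EXPECTED_SHAPES := [
	"browInnerUp",
	"eyeBlinkLeft",
	"eyeBlinkRight",
	"jawOpen",
	"mouthSmileLeft",
	"mouthSmileRight",
	"tongueOut",
]

# Hypothetical export: assign your character's face mesh in the inspector.
@export var face_mesh: MeshInstance3D


func _ready() -> void:
	if face_mesh == null:
		push_warning("Assign face_mesh in the inspector before running the check.")
		return
	var missing := find_missing_shapes(face_mesh, EXPECTED_SHAPES)
	if missing.is_empty():
		print("All expected blendshapes were found.")
	else:
		push_warning("Missing blendshapes: " + ", ".join(missing))


# Returns the names from `names` that the mesh does not expose as blend shapes.
static func find_missing_shapes(mesh_instance: MeshInstance3D, names: Array) -> PackedStringArray:
	var missing := PackedStringArray()
	for shape_name in names:
		# find_blend_shape_by_name() returns -1 when no shape has that name.
		if mesh_instance.find_blend_shape_by_name(shape_name) == -1:
			missing.append(shape_name)
	return missing
```

Running this once at startup reports naming mismatches in a single message, which is easier to read than a warning repeated on every frame.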

The workflow is intentionally simple:

- Point Live Link Face to your computer’s IP

- Set the port

- Enable the server in the addon panel

- Animate your model using one loop

The plugin is MIT-licensed and open source.


Main Features

- Real-time ARKit facial mocap streamed directly into Godot

- Live Link Face integration via UDP

- Exposes all 52 ARKit blendshapes, plus head rotation and eye rotation

- Autoload singleton for direct access to blendshape values

- Editor integration:

  - Device list

  - Live blendshape visualization

  - Packet frame data

  - Error feedback

- Works in-game, not only in the editor

- Simple example script included in the GitHub repository

How It Works

Once Live Link Face streams to your local IP and chosen port, GodotARKit reads the incoming UDP packets and organizes each device as a “subject.” Blendshapes and rotation values are accessible through the singleton, allowing you to animate models directly through a simple loop.
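Before involving the plugin at all, it can be useful to confirm that packets from the phone actually reach your machine. The sketch below uses Godot's built-in `PacketPeerUDP` to listen on a port and print the size and sender of each datagram. It is a debugging aid under stated assumptions, not GodotARKit's implementation: it does not decode the Live Link Face packet format, and `LISTEN_PORT` is a hypothetical value that must match the port set in the app.

```gdscript
extends Node

# Assumption: must match the port configured in Live Link Face.
const LISTEN_PORT := 11111

var _socket := PacketPeerUDP.new()


func _ready() -> void:
	# bind() listens on all interfaces by default and returns OK on success.
	var err: Error = _socket.bind(LISTEN_PORT)
	if err != OK:
		push_error("Could not bind UDP port %d: %s" % [LISTEN_PORT, error_string(err)])
		set_process(false)


func _process(_delta: float) -> void:
	# Drain every datagram that arrived since the last frame.
	while _socket.get_available_packet_count() > 0:
		var data: PackedByteArray = _socket.get_packet()
		# Sender details refer to the packet just returned by get_packet().
		var sender := "%s:%d" % [_socket.get_packet_ip(), _socket.get_packet_port()]
		print("Received %d bytes from %s" % [data.size(), sender])


func _exit_tree() -> void:
	_socket.close()
```

Run this in a scene of its own with the plugin's server stopped, since two listeners on the same port are likely to conflict. If nothing is printed, check the IP address entered in Live Link Face and any firewall rules on the computer.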

Below is the exact example script included by the creator. It demonstrates how to:

- Detect the first ARKit subject

- Read all blendshape values

- Apply them to a mesh

- Rotate the head

- Drive eye rotation via AnimationTree

(The code is the author's. The body of `lowercase_first_letter` appeared only as `pass` in this listing, so it is filled in with a minimal assumed implementation.)

```gdscript
extends Node3D

@onready var face_mesh_instance: MeshInstance3D = $Armature/Skeleton3D/face
@onready var skeleton: Skeleton3D = $Armature/Skeleton3D
@export var neck_bone_name: String = 'head'
@onready var anim_tree: AnimationTree = $AnimationTree

var neck_bone: int
var base_neck_rot: Quaternion


func _ready() -> void:
	ARKitSingleton._server.change_port(11111)
	ARKitSingleton._server.start()

	neck_bone = skeleton.find_bone(neck_bone_name)
	base_neck_rot = skeleton.get_bone_pose_rotation(neck_bone)


func _process(_delta: float) -> void:
	var first_subject: ARKitSubject

	# Check if at least one subject (ARKit UDP service screaming at you) exists
	if len(ARKitSingleton._server.subjects.keys()) == 0:
		return

	# Select the first subject like a brute
	for subject: ARKitSubject in ARKitSingleton._server.subjects.values():
		first_subject = subject
		break

	# Change each blendshape of your model
	for i in range(first_subject.packet.number_of_blendshapes):
		var blendshape_name: String = ARKitServer.blendshape_string_mapping[i]
		var blendshape_value: float = first_subject.packet.blendshapes_array[i]

		# The classic 52 blendshapes of the face
		if i <= ARKitServer.BlendShape.TONGUE_OUT:
			set_blend_shape(face_mesh_instance, blendshape_name, blendshape_value)
		else:
			break

	# The blendshapes related to the head rotation
	var head_yaw: float = first_subject.packet.blendshapes_array[ARKitServer.BlendShape.HEAD_YAW]
	var head_pitch: float = first_subject.packet.blendshapes_array[ARKitServer.BlendShape.HEAD_PITCH]
	var head_roll: float = first_subject.packet.blendshapes_array[ARKitServer.BlendShape.HEAD_ROLL]
	skeleton.set_bone_pose_rotation(neck_bone, base_neck_rot * Quaternion.from_euler(Vector3(-head_pitch, -head_yaw, -head_roll)))

	# The ones related to eye rotation (it's hacky here: both eyes behave the same, so you can't be cross-eyed). Do better
	var left_eye_yaw: float = first_subject.packet.blendshapes_array[ARKitServer.BlendShape.LEFT_EYE_YAW]
	var left_eye_pitch: float = first_subject.packet.blendshapes_array[ARKitServer.BlendShape.LEFT_EYE_PITCH]
	var eyes_pos := Vector2(left_eye_yaw*PI/2, -left_eye_pitch*PI/2)
	anim_tree.set('parameters/blend_position', eyes_pos)


static func set_blend_shape(mesh_instance: MeshInstance3D, blendshape_name: String, blendshape_value: float) -> void:
	var new_name: String = lowercase_first_letter(blendshape_name)
	var blend_shape_idx: int = mesh_instance.find_blend_shape_by_name(new_name)
	if blend_shape_idx == -1:
		push_warning("blendshape: ", new_name, " not found in node: ", mesh_instance)
		return # Not found
	mesh_instance.set_blend_shape_value(blend_shape_idx, blendshape_value)


# Because the blendshape names on Unreal seem to be uppercased, I used uppercased ones in my enums. Meta says that it's not uppercased, but LLF uses uppercased ones in the debug settings, what to do?
static func lowercase_first_letter(text: String) -> String:
	# Assumed implementation (the listing only showed `pass`):
	# lowercase the first character and leave the rest untouched.
	if text.is_empty():
		return text
	return text.left(1).to_lower() + text.substr(1)
```

Use Cases

GodotARKit works well for:

- Real-time facial animation inside Godot

- Live avatar systems

- Indie virtual production setups

- Mocap-driven prototyping

- Facial rig testing and debugging

- Rendering or gameplay features that react to live facial capture

Similar and Useful Tools

- godot_arcore (from the GodotVR organization): A plugin that adds ARCore support to Godot on Android, enabling mobile AR features through Google’s ARCore.

Differences: While GodotARKit focuses on facial mocap via ARKit (iOS) and blendshape streaming, godot_arcore is oriented toward AR environment and scene features (plane detection, tracking, Android support) rather than detailed facial capture.

- Rokoko Face Capture: This is a commercial solution that supports iOS (ARKit) and Android workflows, capturing facial expressions and streaming them to various 3D tools (Blender, Maya, Unity, Unreal) in real time.

Differences: While GodotARKit is a plugin specific to Godot and streams via UDP inside that engine, Rokoko Face Capture offers a broader ecosystem of software/hardware integration and supports multiple applications beyond Godot.

✨ GodotARKit is now available on the Godot Asset Library.

📘 Check out The Godot Shaders Bible, a complete guide to creating and optimizing shaders for 2D and 3D games, from basic functions and screen-space effects to advanced compute techniques.

