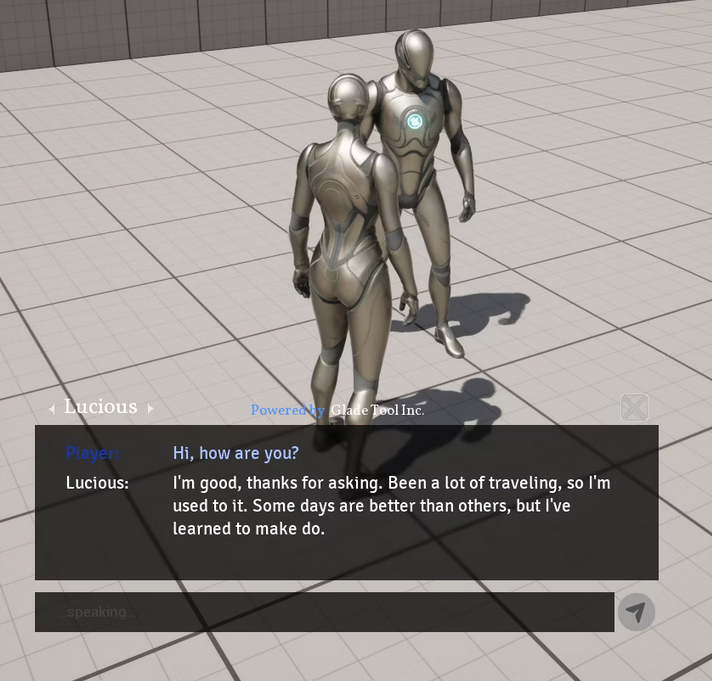

If you’ve ever experimented with AI-driven NPCs in Unreal Engine, you’ve probably noticed that most solutions rely heavily on cloud APIs, which bring high latency and subscription costs that scale poorly. GladeCore, developed by Glade Studio, approaches this challenge differently.

It’s a local, on-device framework that brings large language model interactions directly into your Unreal Engine projects. Players can hold dynamic, unscripted conversations with NPCs through voice or text, and these conversations are processed locally, without external dependencies or per-use costs.

Released in October 2025, the plugin introduces a dialogue and speech pipeline that combines LLMs with Speech-to-Text and Text-to-Speech. Each NPC’s personality, voice, and memory can be defined through simple data assets, giving you full creative control while maintaining low latency and strong performance.

Main Features

- Local LLM Dialogue System

Run natural language conversations entirely on-device. NPCs generate real-time responses powered by local LLM inference, without cloud processing or subscription costs.

- Speech Integration

Includes built-in Speech-to-Text for player input and Text-to-Speech for NPC dialogue. You can use local TTS systems or external ones like ElevenLabs for higher-quality voices.

- Data-Driven Personalities

NPC traits, backstories, and dialogue behavior are fully defined through Unreal Engine Data Assets. No scripting or external tools are required.

- Multiplayer Support

The plugin’s architecture is built with networking in mind, using client-server RPCs to manage communication. Multiplayer functionality isn’t included in the lower-cost tiers, so check the feature matrix to see which plan best fits your needs.

- Low Latency and Scalable Performance

All inference runs locally, so no server or API is needed. It’s designed to scale to dozens or even hundreds of continuous NPC interactions.

- Engine Integration

GladeCore is built on Unreal’s native frameworks and integrates into existing projects through a simple folder-based setup. Developers copy the plugin files into their project directory and should review the included licensing details before distributing builds, since the system relies on a locally hosted Llama-based model. It currently supports Windows for both development and deployment.


If you’re interested in trying it out, Glade Studio offers a free demo for Unreal Engine. A Unity version of the plugin is also in development.

Other Alternatives

- Inworld AI for Unreal: Full AI character platform with a UE plugin for unscripted dialogue, context, and behavior. Cloud-hosted inference with authoring tools and analytics.

Differences: Inworld runs in the cloud with managed tools and analytics, while GladeCore runs entirely on-device with no external services.

- Convai for Unreal: Conversational AI characters for UE with speech, actions, MetaHuman integration, and multi-language support. Cloud inference via the Convai service and SDK.

Differences: Convai emphasizes cloud services and action planning. GladeCore emphasizes fully local, cost-stable inference with no network dependency, at least for dialogue.

- Llama-Unreal: An Unreal plugin that integrates llama.cpp to run local LLMs inside UE projects. Suits teams that want maximum control and open-source workflows.

Differences: Llama-Unreal provides low-level access to local models but requires more engineering effort. GladeCore is a turnkey on-device solution with NPC data assets, optional voice (local or via external APIs), and a better user experience in the Editor.

✨ GladeCore is now available on Fab.

📘 Coming soon: Unreal Engine Blueprints for Beginners, a practical guide to building gameplay visually with Blueprints, covering UI, animations, game logic and mini-game projects.

📘 Coming soon: Unreal Engine Blueprints for Beginners, a practical guide to building gameplay visually with Blueprints, covering UI, animations, game logic and mini-game projects.